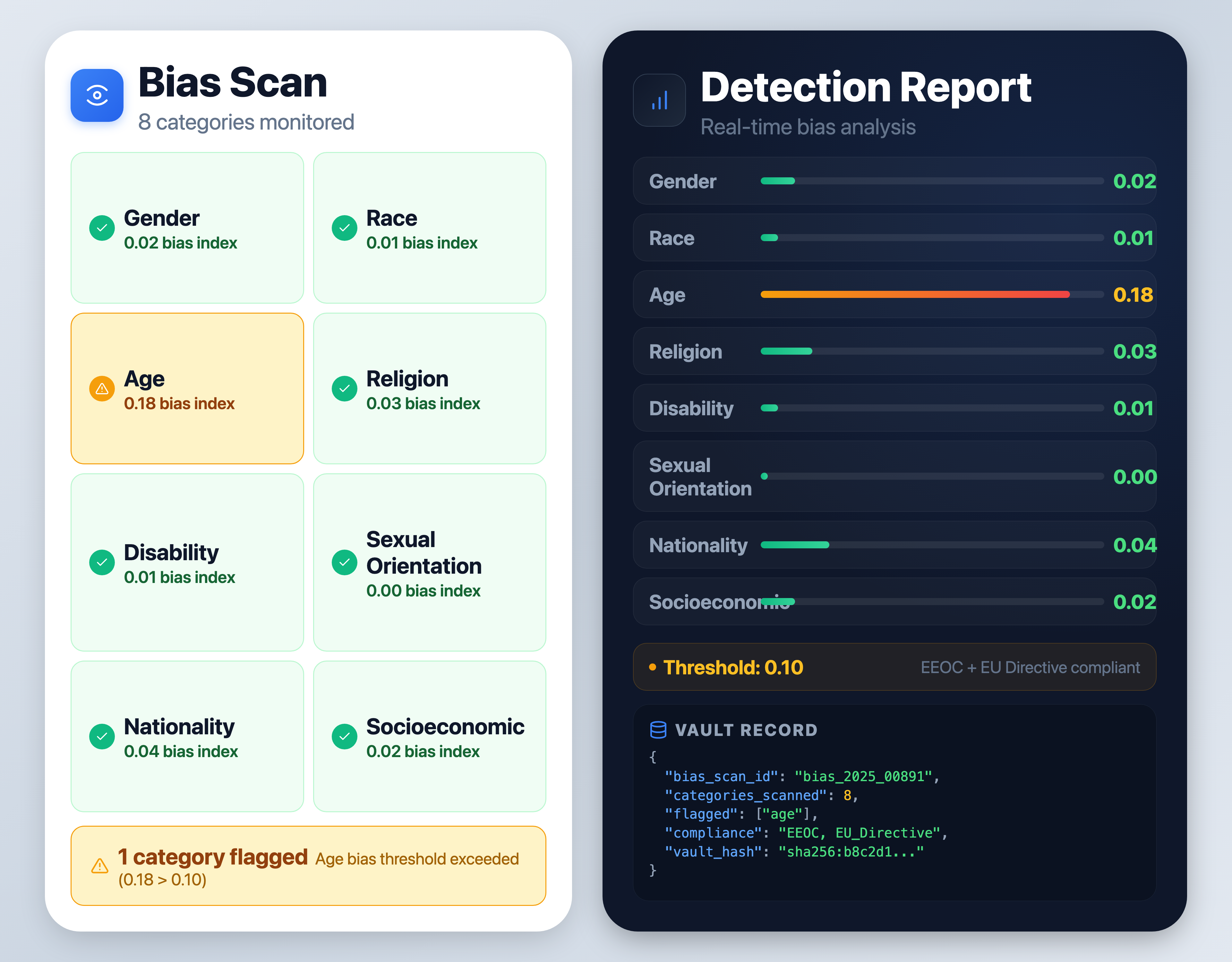

Comprehensive bias monitoring across gender, race, age, religion, disability, sexual orientation, nationality, and socioeconomic status. Every AI output scanned, scored, and reported with full EEOC and EU compliance.

Eight protected categories scanned in every AI output. No blind spots.

- **Gender**: Detect gendered language, pronoun assumptions, and stereotypical role assignments.
- **Race**: Identify racial bias patterns, stereotypes, and discriminatory associations.
- **Age**: Flag age-based generalisations, generational stereotypes, and ageist framing.
- **Religion**: Monitor for religious bias, faith-based assumptions, and sectarian framing.
- **Disability**: Detect ableist language, capability assumptions, and exclusionary phrasing.
- **Sexual orientation**: Identify orientation bias, heteronormative assumptions, and exclusionary patterns.
- **Nationality**: Flag national-origin bias, xenophobic patterns, and citizenship assumptions.
- **Socioeconomic status**: Detect class-based bias, wealth assumptions, and economic stereotyping.
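The simplest layer of a category scanner is lexical pattern matching. The sketch below assumes a regex-per-pattern design. The `PATTERNS` table, the `Flag` type and the `scan` function are hypothetical, and only four of the eight categories are stubbed in. It does not describe how the product itself detects bias. It only shows how a hit can be tagged with a category and a pattern type.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately tiny pattern table: category -> pattern type -> regexes.
# Only four of the eight categories are stubbed; real coverage would need far
# richer, context-aware rules or a trained classifier.
PATTERNS: dict[str, dict[str, list[str]]] = {
    "gender": {
        "role_stereotype": [r"\b(nurse|secretary)\b[^.]*\bshe\b",
                            r"\b(engineer|ceo)\b[^.]*\bhe\b"],
    },
    "age": {
        "generational_stereotype": [r"\bmillennials are (lazy|entitled)\b"],
    },
    "disability": {
        "ableist_language": [r"\bconfined to a wheelchair\b", r"\bsuffers from\b"],
    },
    "socioeconomic": {
        "wealth_assumption": [r"\bpoor people (are|don't)\b"],
    },
}


@dataclass(frozen=True)
class Flag:
    category: str  # protected category, e.g. "gender"
    pattern: str   # pattern type, e.g. "role_stereotype"
    match: str     # the text span that triggered the flag


def scan(text: str) -> list[Flag]:
    """Return one Flag per regex hit, tagged with its category and pattern type."""
    flags: list[Flag] = []
    for category, groups in PATTERNS.items():
        for pattern_name, regexes in groups.items():
            for regex in regexes:
                for m in re.finditer(regex, text, flags=re.IGNORECASE):
                    flags.append(Flag(category, pattern_name, m.group(0)))
    return flags


print(scan("The nurse said she would help. Millennials are lazy."))
```

Pure regex matching cannot see context. For example, it cannot tell a quoted stereotype from an endorsed one. That is why a lexical pass like this is usually only a first filter ahead of context-aware scoring.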

Comprehensive bias detection for every AI output.

Continuous monitoring of every AI output for bias patterns across all 8 categories.

Every output scored for bias risk with severity classification and category tagging.

Generate EEOC- and EU-compliant bias reports with a full audit trail.
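The page does not publish how outputs are scored for bias risk. The following is one possible sketch of "severity classification and category tagging", assuming a per-pattern base weight that grows with hit frequency and is then bucketed into tiers. The weights, tier cut-offs and the `risk_score`, `severity` and `classify` functions are all hypothetical.

```python
from collections import Counter

# Hypothetical base weights per pattern type (not taken from the product).
BASE_WEIGHT = {"pronoun_assumption": 0.4, "generational_stereotype": 0.2, "slur": 0.9}
DEFAULT_WEIGHT = 0.1  # hypothetical weight for pattern types not listed above
# Hypothetical tier cut-offs on a 0..1 risk scale, checked from highest down.
TIERS = [(0.7, "high"), (0.35, "medium"), (0.0, "low")]


def risk_score(pattern: str, count: int) -> float:
    """Frequency-weighted risk: each extra hit adds 25% of the base weight, capped at 1.0."""
    base = BASE_WEIGHT.get(pattern, DEFAULT_WEIGHT)
    return min(1.0, base * (1 + 0.25 * (count - 1)))


def severity(score: float) -> str:
    """Map a risk score to the first tier whose threshold it meets."""
    for threshold, label in TIERS:
        if score >= threshold:
            return label
    return "low"


def classify(hits: list[tuple[str, str]]) -> list[dict]:
    """hits are (category, pattern) pairs; returns one scored, tagged entry per distinct pair."""
    results = []
    for (category, pattern), n in Counter(hits).items():
        score = risk_score(pattern, n)
        results.append({"category": category, "pattern": pattern, "count": n,
                        "score": round(score, 3), "severity": severity(score)})
    return results


print(classify([("gender", "pronoun_assumption"),
                ("gender", "pronoun_assumption"),
                ("age", "generational_stereotype")]))
```

In this sketch, two pronoun-assumption hits score 0.5, which falls in the medium tier. A single generational-stereotype hit scores 0.2, which falls in the low tier.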

Every AI output is scanned for bias across 8 protected categories before delivery.

Every AI output analysed across 8 bias categories in real time. Language patterns, sentiment imbalance, and stereotypical associations detected instantly.

Bias patterns detected and classified by category and severity. Each flag tagged with the specific bias type and confidence level.

Compliance-ready bias report with scoring and recommendations. Full audit trail for EEOC and EU regulatory requirements.
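The page does not say how its audit trail is stored. A common way to make a report log tamper-evident is a hash chain, where each entry commits to the hash of the entry before it. The sketch below assumes that design. The `AuditTrail` class and its field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: dict) -> str:
    # Canonical JSON (sorted keys, no whitespace) so the hash is reproducible.
    payload = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only log in which each entry commits to the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, report: dict) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "report": report,
            "prev_hash": prev,
        }
        entry["hash"] = _digest(entry)  # hash covers timestamp, report and prev_hash
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; an edited, dropped or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

A hash chain is tamper-evident, not tamper-proof. Someone who can rewrite every entry can also recompute every hash. For that reason, the latest hash is usually also recorded somewhere outside the log's control.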

Model response queued for bias analysis → 8-category scan across all protected classes (Gender · Race · Age · Religion · Disability · Orientation · Nationality · Socioeconomic) → report: EEOC + EU compliant · Scored · Recommendations attached
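The page describes scanning "before delivery" but does not say what happens to a flagged output. It could be held, rewritten, or delivered with its report. The sketch below assumes a severity-threshold gate. The `gate` function, the `Report` type and the `block_at` policy are hypothetical, and the scanner is passed in so that any detector can be plugged in.

```python
from dataclasses import dataclass, field
from typing import Callable

SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2}


@dataclass
class Report:
    trace_id: str
    flags: list[dict] = field(default_factory=list)
    delivered: bool = False


def gate(trace_id: str, output: str,
         scanner: Callable[[str], list[dict]],
         block_at: str = "high") -> Report:
    """Scan an output before delivery; hold it if any flag reaches the block_at severity."""
    flags = scanner(output)
    worst = max((SEVERITY_RANK[f["severity"]] for f in flags), default=-1)
    return Report(trace_id, flags, delivered=worst < SEVERITY_RANK[block_at])


def toy_scanner(text: str) -> list[dict]:
    # Stand-in detector for illustration only.
    return [{"category": "gender", "severity": "medium"}] if " she " in text else []


print(gate("trc_demo", "The nurse said she would help.", toy_scanner))
```

With the default `block_at="high"`, a medium flag is reported but the output is still delivered. Lowering the threshold to `"medium"` would hold it instead.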

How each bias category is detected, scored, and reported.

Detection method, scoring approach, and reporting format for each monitored category.

| Category | Detection | Scoring | Reporting |
|---|---|---|---|
| Gender | Pronoun analysis, role-stereotype matching, gendered language patterns | Severity weighted by context and frequency | EEOC adverse impact format |
| Race | Ethnic association detection, stereotypical framing, discriminatory language | Multi-signal scoring with confidence levels | Disparate treatment reporting |
| Age | Generational stereotype detection, age-assumption flagging, ageist phrasing | Pattern frequency with severity tiers | Age Discrimination Act format |
| Religion | Faith-based assumption detection, sectarian bias, religious stereotyping | Context-aware severity classification | EU Equal Treatment Directive format |
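The table refers to an "EEOC adverse impact format" without defining it. The EEOC's standard screen for adverse impact is the four-fifths rule in the Uniform Guidelines on Employee Selection Procedures (29 CFR 1607.4(D)). Under that rule, a group's selection rate below 80% of the highest group's rate is generally regarded as evidence of adverse impact. The rule applies to selection outcomes by group, such as the results of an AI screening tool. It does not apply to the wording of a single output. How the product maps text flags onto this format is not stated. The function names below are hypothetical, and the counts in the example are made up for illustration.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """outcomes maps group -> (selected, total applicants); groups with no applicants are skipped."""
    return {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}


def adverse_impact(outcomes: dict[str, tuple[int, int]],
                   threshold: float = 0.8) -> dict[str, dict]:
    """Four-fifths rule: compare each group's rate with the highest group's rate."""
    rates = selection_rates(outcomes)
    if not rates:
        return {}
    best = max(rates.values())
    report = {}
    for group, rate in rates.items():
        ratio = rate / best if best > 0 else 1.0
        report[group] = {"rate": round(rate, 3),
                         "impact_ratio": round(ratio, 3),
                         "adverse_impact": ratio < threshold}
    return report


# Illustrative counts only: 48 of 80 men selected (0.6), 12 of 40 women selected (0.3).
print(adverse_impact({"men": (48, 80), "women": (12, 40)}))
```

In this example, the women's impact ratio is 0.3 / 0.6 = 0.5, which is below 0.8, so the rule flags it. The Guidelines treat the four-fifths rule as a rule of thumb, and small samples may call for statistical significance testing as well.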


PII and bias detection integrated directly into the verification pipeline. Every output is scanned, scored, and classified before delivery.

```jsonc
// Bias detection result
{
  "trace_id": "trc_bias_8a2f",
  "categories_scanned": 8,
  "flags": [
    {
      "category": "gender",
      "severity": "medium",
      "pattern": "pronoun_assumption"
    },
    {
      "category": "age",
      "severity": "low",
      "pattern": "generational_stereotype"
    }
  ],
  "bias_score": 0.15,
  "compliant": true,
  "frameworks": ["EEOC", "EU_Equal_Treatment"]
}
```

Deploy 8-category bias detection across your entire AI stack. EEOC and EU compliant from day one.