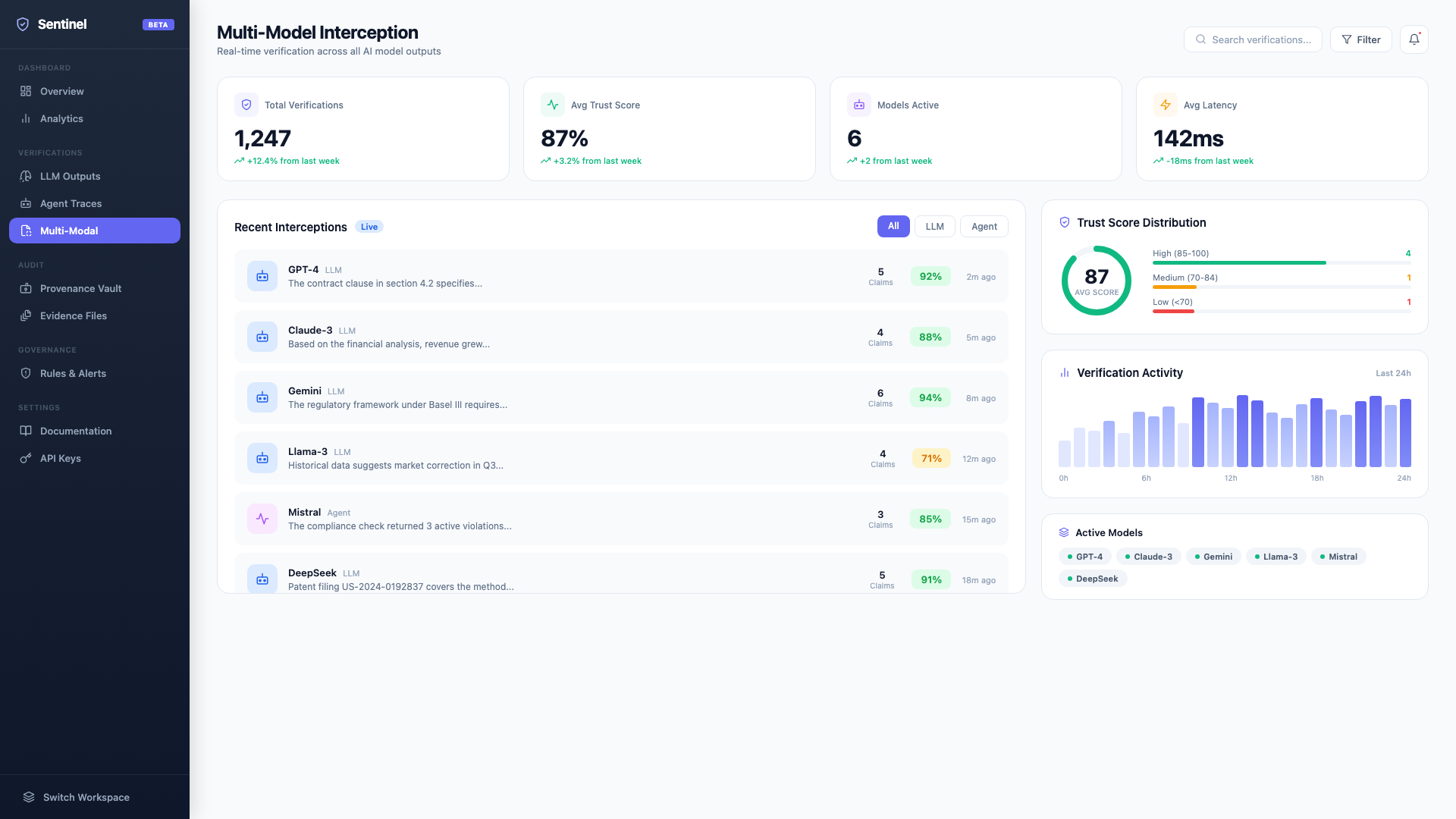

GPT-4, Claude, Gemini, Llama, Mistral, Cohere, DeepSeek, and internal models: all intercepted, all verified. Xybern sits between model outputs and your users.

Universal interception for any AI model.

Works with any foundation model via API interception. No model modifications required.

Every output is captured before reaching the end user. Nothing passes without verification.

Run the same query against multiple models. Compare trust scores and governance compliance side by side.
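A side-by-side comparison amounts to ranking the per-model verification results. The sketch below is a minimal illustration. It assumes each model's result is a dict with the `trust_score`, `flagged`, and `governance` fields from the example API response on this page. `compare_models` and the model names are hypothetical, and any numbers you feed it here would be placeholders, not measurements.

```python
def compare_models(results: dict[str, dict]) -> list[tuple[str, float, int, str]]:
    """Rank per-model verification results, highest trust score first.

    `results` maps a model name to a response dict carrying the fields shown in
    the example API response (trust_score, flagged, governance). This helper is
    hypothetical and not part of any Xybern SDK.
    """
    rows = [
        (model, r["trust_score"], r["flagged"], r["governance"])
        for model, r in results.items()
    ]
    # Sort descending by trust score; Python's sort is stable, so ties keep input order.
    rows.sort(key=lambda row: row[1], reverse=True)
    return rows


if __name__ == "__main__":
    # Placeholder values for two hypothetical models, for illustration only.
    example = {
        "model-a": {"trust_score": 0.81, "flagged": 2, "governance": "PASS"},
        "model-b": {"trust_score": 0.94, "flagged": 1, "governance": "PASS"},
    }
    for model, trust, flagged, governance in compare_models(example):
        print(f"{model:<10} {trust:.2f} flagged={flagged} {governance}")
```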

Xybern intercepts AI outputs at the API layer before they reach end users.

Every AI model API call passes through the Xybern interception layer. No changes to your existing integration.

The output is broken into individual claims. Each claim is mapped to evidence sources.

A deterministic trust score is generated. Outputs are delivered or blocked based on governance rules.
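The four stages can be illustrated with a deliberately naive, self-contained sketch in which every component is an assumption. Sentence splitting stands in for claim decomposition. Exact matching against a set of evidence strings stands in for verification. The score is simply the fraction of verified claims. The 0.9 threshold and the "BLOCK" label are made up. The example response on this page (4 of 5 claims verified, trust score 0.94) shows that Xybern's real scoring is not a plain ratio. The sketch only shows the shape of the pipeline.

```python
import re
from dataclasses import dataclass


@dataclass
class Verdict:
    claims: int
    verified: int
    flagged: int
    trust_score: float
    governance: str


def decompose(output: str) -> list[str]:
    # Assumption: one sentence = one claim (a naive stand-in for real claim extraction).
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", output) if s.strip()]


def verify(claim: str, evidence: set[str]) -> bool:
    # Assumption: a claim is "verified" only if it exactly matches a known evidence string.
    return claim in evidence


def score(checks: list[bool]) -> float:
    # Assumption: fraction of verified claims; no claims yields 0.0.
    return sum(checks) / len(checks) if checks else 0.0


def govern(trust: float, threshold: float = 0.9) -> str:
    # Hypothetical rule and labels: deliver at or above the threshold, otherwise block.
    return "PASS" if trust >= threshold else "BLOCK"


def run_pipeline(output: str, evidence: set[str], threshold: float = 0.9) -> Verdict:
    """Decompose -> Verify -> Score -> Govern, using the toy stages above."""
    claims = decompose(output)
    checks = [verify(c, evidence) for c in claims]
    trust = score(checks)
    verified = sum(checks)
    return Verdict(
        claims=len(claims),
        verified=verified,
        flagged=len(claims) - verified,
        trust_score=trust,
        governance=govern(trust, threshold),
    )
```

Because every stage is a pure function, the same output and evidence always produce the same verdict, which is the property a deterministic trust score relies on.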

GPT-4 / Claude / Gemini / Llama / Custom → Decompose → Verify → Score → Govern → Trust scored · Governance checked · Delivered to user

GPT-4, Claude, Gemini, Llama, Mistral, and more.

Near-zero impact on response time.

Drop-in interception. No model modifications.

Every output verified before delivery.

A single API call intercepts and verifies any model output. It works with any provider: just send the output through Xybern.

Request:

```json
{
  "content": "The contract expires on...",
  "model": "gpt-4",
  "context": "legal-review"
}
```

Response:

```json
{
  "trace_id": "trc_9f8a7b6c",
  "trust_score": 0.94,
  "claims": 5,
  "verified": 4,
  "flagged": 1,
  "governance": "PASS"
}
```

Choose the integration path that fits your architecture.

Route all model traffic through the Xybern gateway for automatic interception.

A drop-in Python/Node SDK wraps your existing model calls with verification.

A transparent proxy sits between your app and the model API, with zero code changes.

Asynchronous verification via webhook: results are posted to your endpoint.
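The idea behind wrapping existing model calls with verification can be pictured as a decorator. This is a minimal sketch of the pattern, not the Xybern SDK's actual interface. `verified`, `OutputBlocked`, and the `verify` callable are hypothetical names. `verify` is assumed to send the text to Xybern and return a dict shaped like the example API response (`trace_id`, `trust_score`, `governance`, ...). Treating any `governance` value other than `"PASS"` as a block is also an assumption.

```python
from functools import wraps
from typing import Callable


class OutputBlocked(Exception):
    """Hypothetical error raised when the governance verdict is not "PASS"."""


def verified(verify: Callable[[str], dict]):
    """Decorator factory: route every model output through `verify` before returning it.

    `verify` is a hypothetical callable returning a dict shaped like the example
    API response; this is an illustration, not a real SDK function.
    """
    def decorator(call_model: Callable[..., str]) -> Callable[..., str]:
        @wraps(call_model)
        def wrapper(*args, **kwargs) -> str:
            output = call_model(*args, **kwargs)  # the existing model call, unchanged
            result = verify(output)
            if result.get("governance") != "PASS":
                # Withhold the output and surface the trace id for auditing.
                raise OutputBlocked(result.get("trace_id", "unknown"))
            return output
        return wrapper
    return decorator


if __name__ == "__main__":
    # Stub verifier for local experimentation only; a real one would call the Xybern API.
    def stub_verify(text: str) -> dict:
        return {"trace_id": "trc_local", "governance": "PASS"}

    @verified(stub_verify)
    def ask_model(prompt: str) -> str:
        return f"echo: {prompt}"  # stand-in for a real provider call

    print(ask_model("When does the contract expire?"))
```

The wrapped function keeps its original signature and name (via `functools.wraps`), which is what lets this style of integration stay "drop-in" for calling code.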

Deploy universal AI interception across your entire model stack.